Yay. GRDDL is now a W3C Recommendation!

I'm very proud to be a part of that, and there is a lot about this particular architectural style that I have always wanted to write about. I recently came upon the opportunity to consider one particular facet.

This is why it seems the same as with GRDDL. There are transformations you can make, but they are not entailments in a logic, they just go from one graph to a different graph.

- Sandro Hawke on W3C Rules Interchange Format Working Group (Tue, 18 Sep 2007)

Yes, that is one part of the larger framework that is well considered. GRDDL does not rely on logical entailment for its normative definition. It is defined operationally, but can also be described via declarative (formal) semantics. It defines a mapping (not a function in the true sense, since the specification clearly identifies ambiguity at the level of the infoset) from an XML representation of an "information resource" to a typed RDF representation of the same "information resource". The output is required to have a well-defined mapping of its own into the RDF abstract syntax.
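To make the operational flavor concrete, here is a minimal sketch of the first step a GRDDL-aware agent takes on a plain XML document: discovering the transformations declared via the `grddl:transformation` attribute on the root element (GRDDL namespace `http://www.w3.org/2003/g/data-view#`). It assumes only that one discovery route; XHTML profile/`link` discovery, namespace and profile transformations, `xml:base`, and actually running the XSLT (which needs a processor outside the standard library) are all left out. The function name `transformation_links` and the `example.org` URIs are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urljoin

# GRDDL namespace as published by the W3C.
GRDDL_NS = "http://www.w3.org/2003/g/data-view#"


def transformation_links(xml_text: str, base_uri: str) -> list[str]:
    """Return the transformation IRIs declared on the document's root element.

    Sketch only: handles the grddl:transformation attribute on the root
    element and nothing else (no XHTML profiles, no namespace documents).
    """
    root = ET.fromstring(xml_text)
    # ElementTree exposes namespaced attributes in {namespace}local form.
    value = root.get(f"{{{GRDDL_NS}}}transformation", "")
    # The attribute holds a whitespace-separated list of IRI references,
    # each resolved against the document's base IRI.
    return [urljoin(base_uri, ref) for ref in value.split()]


doc = ('<data xmlns:grddl="http://www.w3.org/2003/g/data-view#" '
       'grddl:transformation="toRDF.xsl http://example.org/other.xsl"/>')
print(transformation_links(doc, "http://example.org/docs/data.xml"))
```

Each IRI returned would then be dereferenced and applied to the source document; the union of the resulting RDF graphs is what the specification calls a GRDDL result.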

The less formal definition uses a dialect of Notation 3 that is a bit more expressive than Datalog Logic Programming (it uses function symbols, i.e. builtins, in some of the clauses). The proof at the bottom of that page justifies the assertion that http://www.w3.org/2001/sw/grddl-wg/td/titleauthor.html has a GRDDL result which is composed entirely of the following RDF statement:

<http://musicbrainz.org/mm-2.1/album/6b050dcf-7ab1-456d-9e1b-c3c41c18eed2> is named "Are You Experienced?" .

Frankly, I would have gone with "Bold as Love", myself =)

Once you have a (mostly) well-defined function for rendering RDF from information resources, you enable the deployment of useful (and reusable) interpretations for intelligent agents (more on these later). For example, the test suite is a large semantic web of XML documents that GRDDL-aware agents can traverse, performing Quality Assurance tests (using EARL) of their conformance to the operational semantics of GRDDL.
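As a rough illustration of the QA idea, the sketch below compares a computed GRDDL result against an expected one and records the outcome as a small EARL assertion. The vocabulary terms (`earl:Assertion`, `earl:assertedBy`, `earl:subject`, `earl:test`, `earl:result`, `earl:outcome`, `earl:passed`/`earl:failed`) follow the W3C EARL schema, but the function names and IRIs are hypothetical, and the comparison is plain set equality. That is only correct for ground graphs; real GRDDL results containing blank nodes need a graph isomorphism check instead.

```python
EARL = "http://www.w3.org/ns/earl#"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

Triple = tuple[str, str, str]


def earl_assertion(agent: str, test: str, subject: str,
                   actual: set[Triple], expected: set[Triple]) -> list[Triple]:
    """Build a (hypothetical) EARL assertion for one test case.

    Set equality stands in for graph equality, so this is only valid
    when neither graph contains blank nodes.
    """
    outcome = "passed" if actual == expected else "failed"
    a, r = "_:assertion", "_:result"  # blank node labels
    return [
        (a, RDF_TYPE, EARL + "Assertion"),
        (a, EARL + "assertedBy", agent),
        (a, EARL + "subject", subject),
        (a, EARL + "test", test),
        (a, EARL + "result", r),
        (r, RDF_TYPE, EARL + "TestResult"),
        (r, EARL + "outcome", EARL + outcome),
    ]


def to_ntriples(triples: list[Triple]) -> str:
    """Serialize IRI/blank-node triples as N-Triples lines."""
    def term(t: str) -> str:
        return t if t.startswith("_:") else f"<{t}>"
    return "\n".join(f"{term(s)} {term(p)} {term(o)} ." for s, p, o in triples)
```

An agent walking the test suite would call `earl_assertion` once per test document and concatenate the serialized reports.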

However, it was very important to leave entailment out of the equation until it serves a justifiable purpose. For example, a well-designed RDF query language does not require logical entailment (RDF, RDFS, OWL, or otherwise) to be useful in the general case. You can calculate a closure (or Herbrand base) and then dispatch structural graph queries against it. This was always true with Versa. You can glean (pun intended) quite a bit from the structural nature of a graph alone; a whole generation of graph-theoretical literature demonstrates this.
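A minimal sketch of "compute a closure, then query structurally", assuming triples are plain string tuples and rules are Python functions from a set of facts to derived facts. The single rule shown (transitivity of `rdfs:subClassOf`) is chosen only for illustration, and the `ex:` names are hypothetical. The query step is pure pattern matching with no entailment of its own, and it works just as well on the raw graph with no rules at all.

```python
Triple = tuple[str, str, str]

SC = "rdfs:subClassOf"


def closure(graph: set[Triple], rules) -> set[Triple]:
    """Apply every rule until no new triples appear (a fixpoint)."""
    facts = set(graph)
    while True:
        new = set()
        for rule in rules:
            new |= rule(facts) - facts
        if not new:
            return facts
        facts |= new


def subclass_transitivity(facts: set[Triple]) -> set[Triple]:
    """Datalog-style rule: (a sc b), (b sc c) => (a sc c)."""
    return {(a, SC, c)
            for (a, p1, b) in facts if p1 == SC
            for (b2, p2, c) in facts if p2 == SC and b2 == b}


def match(facts: set[Triple], pattern) -> list[Triple]:
    """Structural query: None in the pattern acts as a wildcard."""
    return sorted(t for t in facts
                  if all(q is None or q == v for q, v in zip(pattern, t)))


g = {("ex:Album", SC, "ex:Work"), ("ex:Work", SC, "ex:Thing")}
print(match(closure(g, [subclass_transitivity]), ("ex:Album", SC, None)))
```

Because the rule introduces no new terms (no function symbols), the fixpoint is guaranteed to be reached; rules with builtins that mint new terms lose that guarantee.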

In addition, once you have a well-defined set of semantics for identifying information resources with RDF assertions that are (logically) relevant to the closure, you have a clear separation between manipulation of surface syntax and full-blown logical reasoning systems.
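The separation can be pictured as two independent stages: a syntax stage that maps XML to RDF and knows nothing about logic, and an optional reasoning stage that is plugged in only when a caller asks for it. In the sketch below, `surface_stage` is a hypothetical stand-in for a GRDDL transformation (each `<item about="..." title="..."/>` element becomes one `dc:title` triple), and the reasoner is any function from graph to graph; none of these names come from the GRDDL specification.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Optional

Triple = tuple[str, str, str]
Reasoner = Callable[[frozenset], frozenset]


def surface_stage(xml_text: str) -> frozenset[Triple]:
    """Hypothetical syntax-only mapping from XML to triples."""
    root = ET.fromstring(xml_text)
    return frozenset((el.get("about"), "dc:title", el.get("title"))
                     for el in root.iter("item"))


def process(xml_text: str, reasoner: Optional[Reasoner] = None) -> frozenset[Triple]:
    """Run the syntax stage; apply a reasoner only if one is supplied."""
    graph = surface_stage(xml_text)
    return graph if reasoner is None else reasoner(graph)


doc = '<items><item about="ex:album" title="Are You Experienced?"/></items>'
print(process(doc))  # structural result, no entailment involved
```

Swapping reasoners (or omitting one) never touches the syntax stage, which is the property the paragraph above describes.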

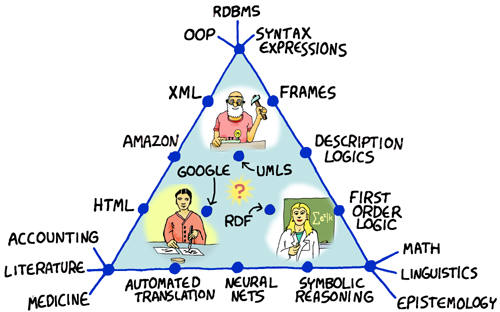

Constraining the use of entailment to only where it has demonstrated value for the problem space should be considered a semantic web architectural style (if you will). Where it does make sense to use entailment, however, you will find the representations are well-engineered for the task.

My favorite initial read on the subject is
